Exploring Rulial Space

Last night I learned about Stephen Wolfram’s concept of the ruliad from a TED talk he gave a few weeks ago.

The ruliad is the entire, interconnected web of possible states that arise from following every possible computational rule. As an observer inside the ruliad, you can only observe the rules you are nested within.

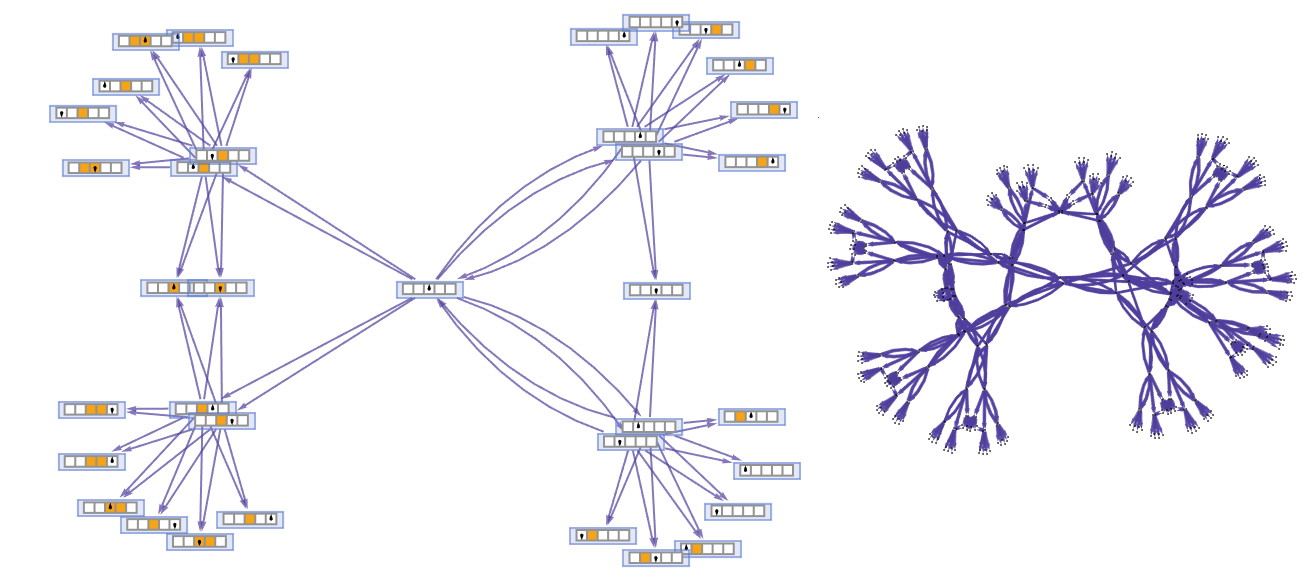

From the outside, you could visualize and traverse rulial space as an interconnected network of possible rules.

As an observer, or participant, inside the ruliad, you only ever sample a very small slice of rulial space. It is because we observe from a specific place in rulial space that we perceive the laws of physics we do, such as general relativity, quantum mechanics, and statistical mechanics. So you can think of different minds as occupying different places in rulial space. Human minds that think alike are close together, animals are further away, and further out still are “alien minds”, where it’s hard to make a meaningful translation.

I am absolutely fascinated by the idea of rulial space, and by how alien minds and potential insights might lie just outside our perception. Next I’ll talk about a few other recent projects and experiments that inspired me. Then I’ll conclude by weaving all of these threads together.

Experiments with the Stable Diffusion latent space

In September 2023, I started talking with my friend Jesse about exploring the latent space of Stable Diffusion. He’s got lots of wacky ideas, but I thought it would be worth sharing some of the more concrete examples and demos he put together.

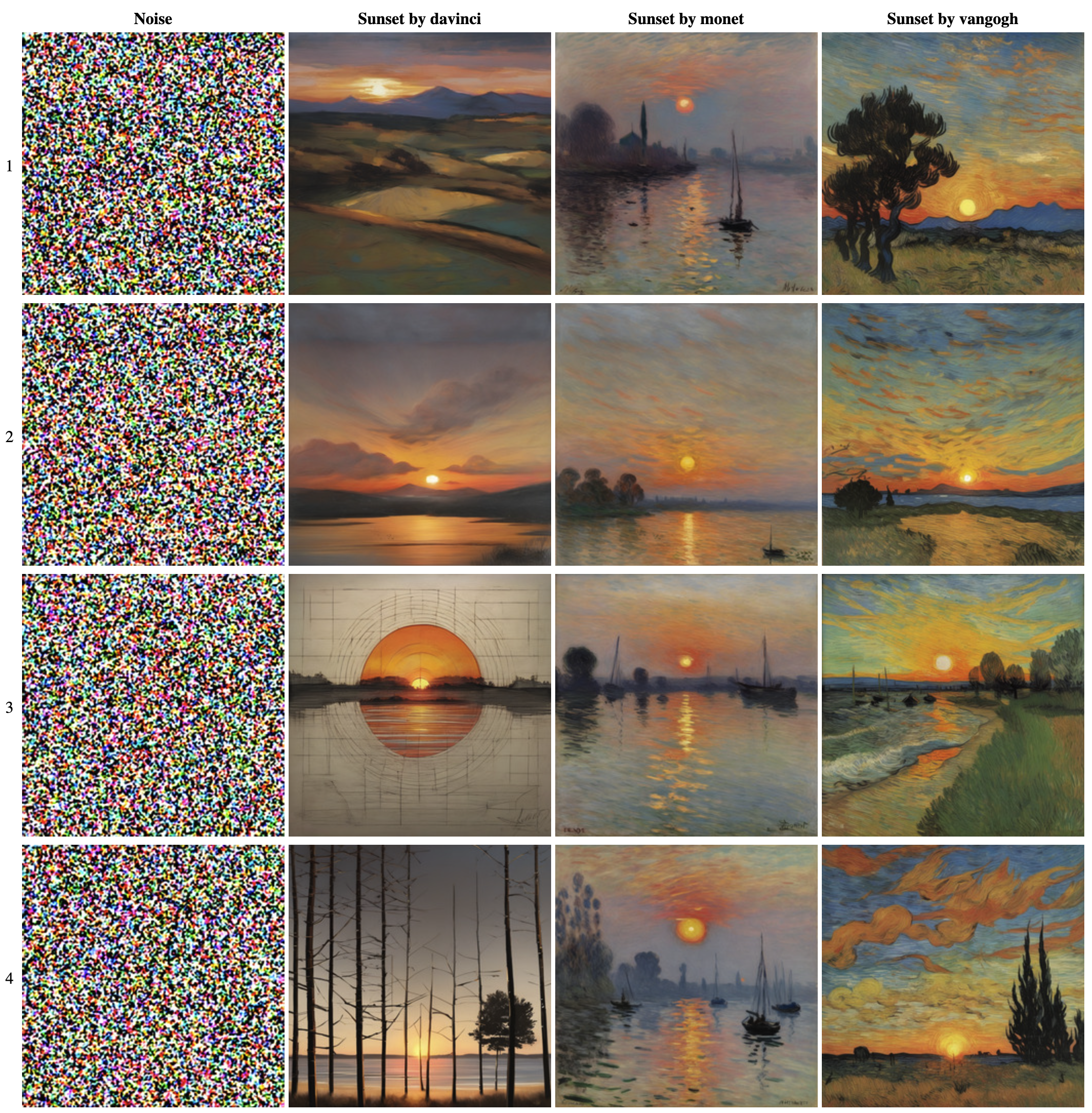

The first experiment explored the impact of the Stable Diffusion seed across similar but different prompts: https://lets.m4ke.org/sunsets/

The y-axis is the seed number and the x-axis is the prompt. You can start to see specific features being “defined” in the initial noise, which is determined by the seed number.

Next he built a super cool “Latent Offsets” demo, where you can quickly navigate and visualize how 1-pixel offsets of the initial noise affect the output image. Press an arrow key repeatedly in one direction and you’ll see what I mean.

Try it out here: https://lets.m4ke.org/offsets/
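To make the seed and offset ideas concrete, here is a minimal NumPy sketch. It assumes an offset is a circular shift of the initial latent noise by whole latent pixels. I don’t know how the demo actually handles the edges, and the helper names `initial_noise` and `offset_noise` are hypothetical. The `(4, 64, 64)` shape matches Stable Diffusion v1.x at 512×512, where the VAE downsamples by a factor of 8. Real pipelines usually draw this noise with PyTorch, so the exact values here won’t match theirs.

```python
import numpy as np

# Assumption: Stable Diffusion v1.x at 512x512 starts from a 4-channel,
# 64x64 latent (the VAE downsamples by a factor of 8).
LATENT_SHAPE = (4, 64, 64)


def initial_noise(seed: int) -> np.ndarray:
    """Hypothetical helper: the same seed always yields the same Gaussian noise."""
    rng = np.random.default_rng(seed)
    return rng.standard_normal(LATENT_SHAPE).astype(np.float32)


def offset_noise(noise: np.ndarray, dx: int = 0, dy: int = 0) -> np.ndarray:
    """Hypothetical helper: shift the noise by (dx, dy) latent pixels.

    Assumption: values pushed off one edge wrap around to the opposite edge.
    Axis 1 is height (y) and axis 2 is width (x).
    """
    return np.roll(noise, shift=(dy, dx), axis=(1, 2))


# Pressing the right arrow key three times ~ a shift of 3 latent pixels.
base = initial_noise(seed=1234)
shifted = offset_noise(base, dx=3)
```

The shifted tensor holds the same values in new positions. That is one plausible reason features “defined” by the noise would slide across the image rather than disappear.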

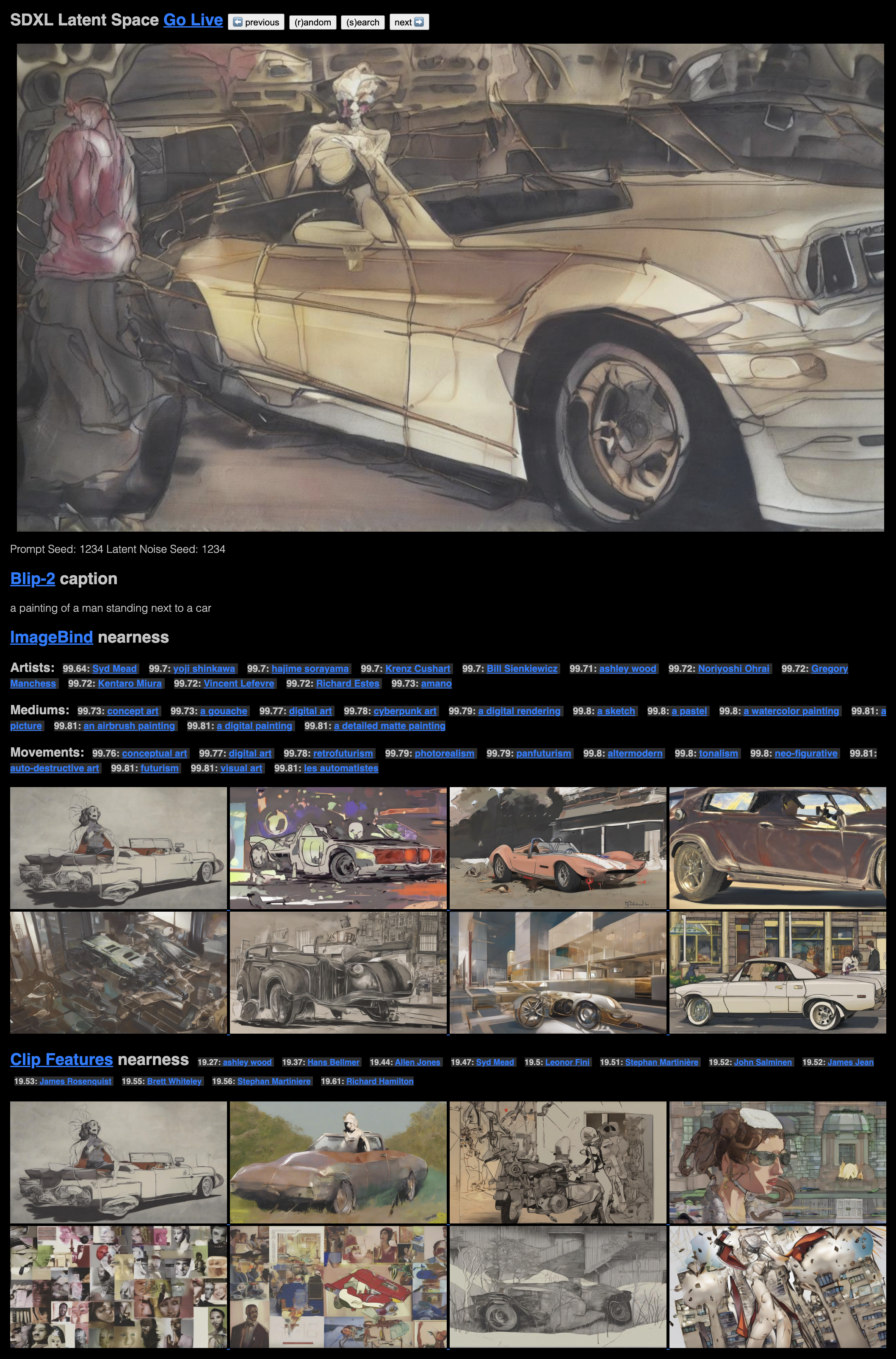

Most recently, he created https://zomg.ai/tv, which is sort of like interdimensional cable from Rick & Morty. Every few seconds you get a completely new and strange “dream” of an image from somewhere in the Stable Diffusion latent space.

In the screenshot below, you see the generated frame at the top. Below it you see the BLIP-2 caption, ImageBind embeddings, and CLIP features. So you can start with a completely random-looking image and quickly find other images that are similar in embedding space.

You can try this example by going to https://zomg.ai/tv/1234
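Finding “similar images in embedding space” usually comes down to a nearest-neighbor search. Here is a minimal brute-force sketch using cosine similarity. I’m assuming cosine similarity over precomputed vectors, and I don’t know what zomg.ai actually uses under the hood. The toy 2-D vectors stand in for real embeddings such as CLIP features, which have hundreds of dimensions.

```python
import numpy as np


def cosine_top_k(query: np.ndarray, library: np.ndarray, k: int = 3) -> np.ndarray:
    """Return the indices of the k rows of `library` most similar to `query`.

    `library` is an (n_images, dim) array of embeddings, one row per frame.
    """
    q = query / np.linalg.norm(query)
    lib = library / np.linalg.norm(library, axis=1, keepdims=True)
    scores = lib @ q  # cosine similarity between the query and every frame
    return np.argsort(-scores)[:k]  # highest similarity first


# Toy 2-D "embeddings" for four frames (real ones are much larger).
library = np.array([
    [1.0, 0.0],
    [0.9, 0.1],
    [0.0, 1.0],
    [-1.0, 0.0],
])
query = np.array([1.0, 0.0])
nearest = cosine_top_k(query, library, k=2)  # frames 0 and 1
```

Brute force is fine for a few thousand frames. For millions you would probably switch to an approximate nearest-neighbor index.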

Then, a few weeks ago, we discussed how AI agents could traverse the latent space of Stable Diffusion and tell us when they find something interesting, whether that’s patterns, styles, or motifs.

I love this idea that we can unleash hundreds or thousands of AI agents onto a giant amorphous blob of embedding vectors and have them find meaningful things for us.
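Here is a toy sketch of what one of those agents might do: take a random walk through embedding space and record any point that scores above a threshold. Everything here is hypothetical. In particular, `score` stands in for whatever “interesting” would really mean (a classifier, a novelty measure, a human in the loop). Running a swarm of agents just means running the same walk with different seeds.

```python
import numpy as np
from typing import Callable


def random_walk_agent(
    start: np.ndarray,
    score: Callable[[np.ndarray], float],
    steps: int = 100,
    step_size: float = 0.1,
    threshold: float = 0.5,
    seed: int = 0,
) -> list[tuple[int, float, np.ndarray]]:
    """Take Gaussian steps from `start`, keeping every point whose score clears the threshold."""
    rng = np.random.default_rng(seed)
    point = np.asarray(start, dtype=float)
    finds = []
    for step in range(steps):
        point = point + step_size * rng.standard_normal(point.shape)
        s = score(point)
        if s >= threshold:
            finds.append((step, s, point.copy()))
    return finds


# Hypothetical "interestingness": closeness to one arbitrary target point.
target = np.ones(8)


def closeness(p: np.ndarray) -> float:
    return float(np.exp(-np.linalg.norm(p - target)))


# A small swarm: the same walk with 16 different seeds.
swarm = [random_walk_agent(np.zeros(8), closeness, steps=200, seed=i) for i in range(16)]
```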

Discovering new words

There was this awesome hackathon project by Joel Simon and Ryan Murdock that I first saw at the Replicate hackathon back in early September.

https://joelsimon.net/new-words.html

The idea was that you could expand the English language by identifying gaps in embedding space between words and filling those gaps with new words: concepts that sit between two words but don’t already have a word of their own.

I thought this was so clever!

It points to the idea that it is possible to find insights or novel ideas in the space between other ideas in embedding space.
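To illustrate the “gap between two words” idea, here is a toy sketch. It is not how the project works, and I haven’t checked their actual method. It takes the normalized midpoint of two word vectors and calls it a gap if no other word in the vocabulary is close to that midpoint. The vocabulary, the vectors, and the `max_sim` threshold are all made up for illustration.

```python
import numpy as np
from typing import Optional


def normalize(v: np.ndarray) -> np.ndarray:
    return v / np.linalg.norm(v)


def find_gap(vocab: dict[str, np.ndarray], a: str, b: str,
             max_sim: float = 0.9) -> Optional[np.ndarray]:
    """Return the midpoint between words a and b if no other word is near it."""
    mid = normalize(normalize(vocab[a]) + normalize(vocab[b]))
    others = [normalize(v) @ mid for w, v in vocab.items() if w not in (a, b)]
    if others and max(others) >= max_sim:
        return None  # an existing word already covers this concept
    return mid  # an unnamed spot: a candidate for a new word


# Toy 3-D vectors; real word embeddings have hundreds of dimensions.
vocab = {w: np.array(v, dtype=float) for w, v in {
    "cat": [1.0, 0.0, 0.0],
    "dog": [0.0, 1.0, 0.0],
    "pet": [0.7, 0.7, 0.1],  # already sits between "cat" and "dog"
    "car": [0.0, 0.0, 1.0],
}.items()}

filled = find_gap(vocab, "cat", "dog")  # None: "pet" fills this gap
gap = find_gap(vocab, "cat", "car")     # a midpoint with no word nearby
```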

Original Inspiration

My original inspiration for getting into the world of AI was a conversation with Demis Hassabis on the Lex Fridman podcast.

Throughout the conversation they talk about using AI to make real scientific progress, employing AI to chip away at the edges of human understanding in areas like protein folding and nuclear fusion. Last year DeepMind announced AlphaTensor, an AI system that discovered new matrix multiplication algorithms, some of which slightly speed up computation. Truly inspiring stuff.

Conclusion

Over the last few months I’ve been seeing these sorts of projects, ideas, and experiments emerge, where folks are trying to navigate high-dimensional embedding spaces in creative and inspiring ways.

So when I listened to Wolfram’s talk where he introduced the idea of the ruliad and rulial space, my mind exploded 🤯

It’s the same pattern as the Stable Diffusion experiments. We have a high-dimensional space, and if we could create AI agents that randomly walk rulial space, maybe we could discover alien insights from distant computational universes.
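The ruliad is far too large to enumerate, but a tiny toy version of “walking a space of rules” is easy to sketch. This is my own illustration, not Wolfram’s method. It uses the 256 elementary cellular automaton rules and treats two rules as neighbors when their 8-bit rule numbers differ in exactly one bit, which is an arbitrary choice. Each rule’s behavior is summarized crudely by how many cells are alive after a few generations. A real agent would need a much better sense of what counts as “interesting”.

```python
import random


def step_ca(cells: list[int], rule: int) -> list[int]:
    """Apply an elementary cellular automaton rule (0-255) once, wrapping at the edges."""
    n = len(cells)
    out = []
    for i in range(n):
        left, center, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        pattern = (left << 2) | (center << 1) | right  # neighborhood as a number 0..7
        out.append((rule >> pattern) & 1)  # that bit of the rule number is the new state
    return out


def neighbor_rule(rule: int, rng: random.Random) -> int:
    """Assumption: an adjacent rule is one with a single output bit flipped."""
    return rule ^ (1 << rng.randrange(8))


def walk_rules(start_rule: int, steps: int, seed: int = 0,
               width: int = 31, generations: int = 15) -> list[tuple[int, int]]:
    """Random-walk through rule space, recording (rule, live cells) at each stop."""
    rng = random.Random(seed)
    rule = start_rule
    visited = []
    for _ in range(steps):
        cells = [0] * width
        cells[width // 2] = 1  # start from a single live cell
        for _gen in range(generations):
            cells = step_ca(cells, rule)
        visited.append((rule, sum(cells)))  # crude "what did this rule do?" summary
        rule = neighbor_rule(rule, rng)
    return visited


path = walk_rules(start_rule=30, steps=10)
```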

I imagine these sorts of alien insights to be things akin to general relativity or quantum mechanics. What sort of reality cheat codes lie just outside our neighborhood of rulial space?

I admit, this is all very sci-fi. I’m not sure it makes sense to draw lines between these individual pieces of inspiration, but I can’t help but feel there’s something interesting here.